Up until now I thought I was past having to troubleshoot SSM (Session Manager) connection issues. I almost always default to it over SSH: my Terraform configs don't include SSH keys, and I have no key pairs saved on my machine.

I have a Terraform environment for studying that just deploys some basic foundational resources so I can test and experiment with other services:

- VPC

- 4 subnets: two public, two private

- 2 instances: one in a public subnet and one in a private subnet

- An ALB in the public subnets with the private instance as its target

- The private subnets have no NAT gateway or other internet connectivity. Their only access is through a gateway endpoint for S3 and interface endpoints for SSM.

- The public subnets, however, have regular outbound internet access through an internet gateway (IGW)

- None of the gateway or interface endpoints are associated with the public subnets

The issue

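The last point is the core of this post. When Private DNS is enabled on an interface endpoint, the Amazon-provided DNS resolver (the VPC+2 address, e.g. 10.0.0.2 for a 10.0.0.0/16 VPC) answers the service's public hostname, such as `ssm.<region>.amazonaws.com`, with the endpoint's private IPs for the whole VPC. That happens regardless of which subnets the endpoint lives in; interface endpoints don't use route table entries or associations at all. So the public instance resolves SSM to the endpoint network interfaces in the private subnets rather than to the public service, and if the endpoint's security group doesn't allow traffic from the public subnet, the SSM agent can't connect even though the instance has a working route to the internet.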

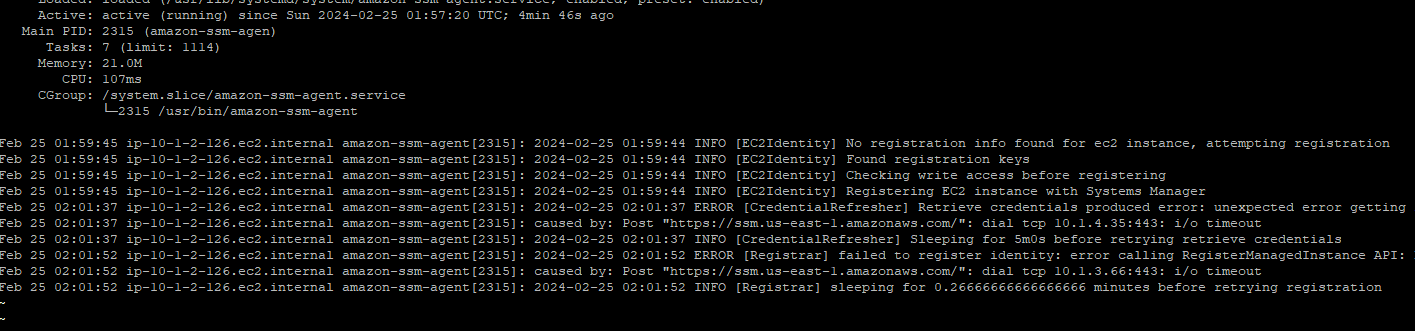
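One way to see this from the public instance is to resolve the SSM hostnames and check whether the answers fall inside the private subnets. This is a minimal sketch, assuming region `us-east-1` and private subnet CIDRs `10.0.3.0/24` and `10.0.4.0/24` (both hypothetical placeholders), and checking the hostnames Session Manager typically uses (`ssm`, `ssmmessages`, `ec2messages`). It uses glibc's `getent ahostsv4`, so `dig` isn't needed.

```bash
#!/usr/bin/env bash
# Sketch: do the SSM hostnames resolve to interface endpoint IPs in the private subnets?
# ASSUMPTIONS: the region and private subnet CIDRs below are hypothetical placeholders.
REGION="${REGION:-us-east-1}"
PRIVATE_CIDRS=("10.0.3.0/24" "10.0.4.0/24")

# Convert a dotted-quad IPv4 address to a 32-bit integer (10# avoids octal parsing).
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Return 0 if IPv4 address $1 falls inside CIDR block $2 (e.g. 10.0.3.0/24).
in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Resolve each SSM-related hostname and label every IPv4 answer.
check_ssm_dns() {
  local svc host ip cidr where answers
  for svc in ssm ssmmessages ec2messages; do
    host="${svc}.${REGION}.amazonaws.com"
    answers=$(getent ahostsv4 "$host" | awk '{print $1}' | sort -u)
    if [[ -z "$answers" ]]; then
      echo "$host -> no IPv4 answer"
      continue
    fi
    for ip in $answers; do
      where="outside the private subnets"
      for cidr in "${PRIVATE_CIDRS[@]}"; do
        if in_cidr "$ip" "$cidr"; then
          where="in private subnet $cidr (interface endpoint)"
        fi
      done
      echo "$host -> $ip ($where)"
    done
  done
}
```

After sourcing it, `check_ssm_dns` runs the check, and `REGION=eu-west-1 check_ssm_dns` overrides the region for a single call.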

Here is what it looks like when you SSH to the instance and check the SSM agent status, along with the two commands you can use to diagnose it:

- `systemctl status amazon-ssm-agent.service`

- `cat /var/log/amazon/ssm/errors.log`
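Name resolution alone doesn't prove the path works: security groups silently drop traffic they don't allow, so if the endpoints' security group doesn't admit the public subnet, a TCP connection to port 443 on those private IPs just times out. A minimal check, assuming bash (for its `/dev/tcp` redirection) and coreutils `timeout`; the region default and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: is TCP 443 on each SSM endpoint reachable from this instance?
# Requires bash (not sh) for /dev/tcp and coreutils `timeout`.

# Return 0 if a TCP connection to host $1, port $2 succeeds within $3 seconds (default 5).
can_connect() {
  local host=$1 port=$2 secs=${3:-5}
  timeout "$secs" bash -c ">/dev/tcp/${host}/${port}" 2>/dev/null
}

# Hypothetical helper: probe the SSM-related hostnames; the region default is an assumption.
check_ssm_reachability() {
  local region=${1:-us-east-1} svc host
  for svc in ssm ssmmessages ec2messages; do
    host="${svc}.${region}.amazonaws.com"
    if can_connect "$host" 443; then
      echo "OK       $host:443"
    else
      echo "BLOCKED  $host:443 (check the endpoint security group for TCP 443 from this subnet)"
    fi
  done
}
```

Running `check_ssm_reachability eu-west-1` on the public instance probes a different region; a `BLOCKED` line next to a private endpoint IP from the DNS check points at the security group rather than at routing.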

The solution

Fixing this in my environment involved associating the S3 gateway endpoint with the public route tables and, in the security group attached to the interface endpoints, allowing inbound HTTPS (TCP 443) from the public EC2 subnet.
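Here is a rough AWS CLI sketch of those two changes. Every ID and CIDR in it is a hypothetical placeholder, and the hypothetical `run` helper prints each command instead of executing it when `DRY_RUN=1` is set.

```bash
#!/usr/bin/env bash
# Sketch of the fix with the AWS CLI. All IDs and CIDRs are HYPOTHETICAL placeholders.
S3_GW_ENDPOINT_ID="${S3_GW_ENDPOINT_ID:-vpce-0123456789abcdef0}"   # S3 gateway endpoint
PUBLIC_RTB_IDS=("rtb-0aaaaaaaaaaaaaaaa")                           # public route table(s)
ENDPOINT_SG_ID="${ENDPOINT_SG_ID:-sg-0bbbbbbbbbbbbbbbb}"            # SG on the SSM interface endpoints
PUBLIC_SUBNET_CIDRS=("10.0.1.0/24" "10.0.2.0/24")                  # public subnets

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

apply_fix() {
  # 1. Associate the S3 gateway endpoint with the public route table(s).
  run aws ec2 modify-vpc-endpoint \
    --vpc-endpoint-id "$S3_GW_ENDPOINT_ID" \
    --add-route-table-ids "${PUBLIC_RTB_IDS[@]}"

  # 2. Allow HTTPS from each public subnet into the interface endpoints' security group.
  local cidr
  for cidr in "${PUBLIC_SUBNET_CIDRS[@]}"; do
    run aws ec2 authorize-security-group-ingress \
      --group-id "$ENDPOINT_SG_ID" \
      --protocol tcp --port 443 --cidr "$cidr"
  done
}
```

Run `DRY_RUN=1 apply_fix` to review the commands, then `apply_fix` to apply them. In Terraform, the equivalent would be an `aws_vpc_endpoint_route_table_association` for each public route table plus an ingress rule (for example `aws_vpc_security_group_ingress_rule`) on the endpoint security group.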

You can't fix it by creating extra interface endpoints, because the DNS records already exist and point to the interface endpoints in the private subnets. That behavior comes from the Private DNS option on the interface endpoint (`private_dns_enabled` in Terraform, `PrivateDnsEnabled` in the API).
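To check which endpoints in the VPC carry those private DNS records, the AWS CLI can list each endpoint with its `PrivateDnsEnabled` flag. A minimal sketch; the function name is hypothetical, the VPC ID in the usage line is a placeholder, and the call needs `ec2:DescribeVpcEndpoints` permission.

```bash
#!/usr/bin/env bash
# Sketch: list the VPC's endpoints with their type and Private DNS setting.
# The function name is hypothetical; pass your own VPC ID.
list_endpoint_dns() {
  local vpc_id=${1:?usage: list_endpoint_dns <vpc-id>}
  aws ec2 describe-vpc-endpoints \
    --filters "Name=vpc-id,Values=${vpc_id}" \
    --query 'VpcEndpoints[].[ServiceName,VpcEndpointType,PrivateDnsEnabled]' \
    --output table
}
```

For example, `list_endpoint_dns vpc-0123456789abcdef0` shows whether each SSM interface endpoint has Private DNS turned on, which is what makes its records apply to every subnet in the VPC.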